Ai-MicroCloud®

Ai Platform-as-a-Service

Powering Business Innovation

Portability

- AWS, Azure, GCP in under 15 min

- On-premise in under 30 min

- Multi-cloud

- Cloud-2-edge

- Build-once-run-ai-anywhere

Productivity

- Modelers launch workstations in seconds

- Ops deploy services in minutes

- Ops serve vision, LLM, NLP, and other models in minutes

- Support for heterogeneous chips

Composability

- Plug-n-play third-party tools in minutes

- Plug-n-play HPC and add-on orchestration

- Ready-to-consume foundational models

Scalability

- Uniquely combine ML Ops & Infra

- Scale up for model fine-tuning

- Scale up inference deployment from Cloud to thousands of Edge locations

Collaboration & Reuse

- Product, Modeler, Ops roles

- Aggregate Ai assets for reuse

- Snapshot training environments

- Automate deployment

- Rapidly build and deploy GenAi, ML, and DL applications

Enterprise Integration

- With enterprise ML Ops

- With enterprise Dev Sec Ops

- With developer tool chains

- Python SDK for automation

- CLI for model serving

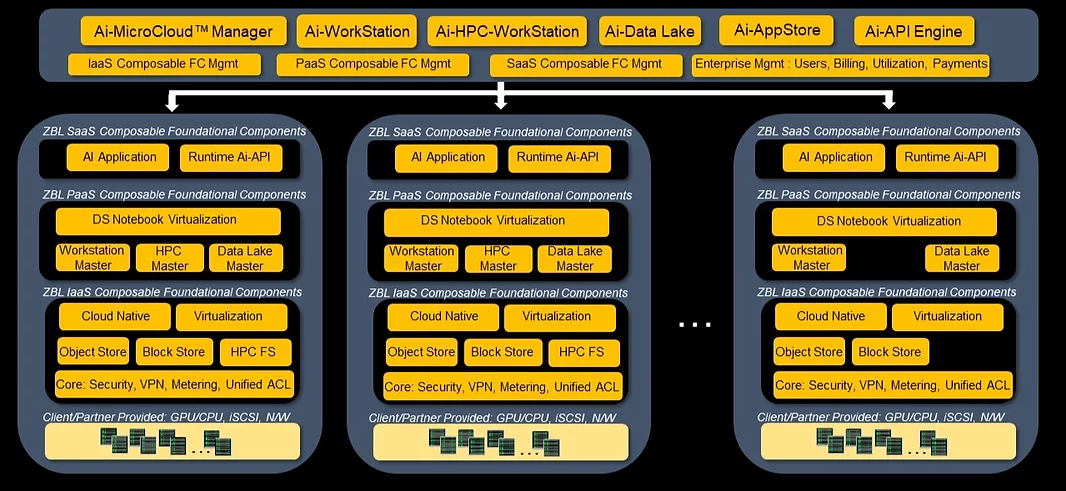

Architecture Diagram

Composable Foundational Components

SaaS Components

Ai-WorkStation & Ai-HPC-WorkStation

A productive web-based developer environment for Ai model trainers, with dynamically provisioned resources. It is the fastest way to create end-to-end Ai pipelines on heterogeneous chip architectures.

Data Science

A versatile web-based digital asset repository for ready-to-use Ai inference engines from various sources. It can launch pre-existing Ai model training environments and inference engines.

Product Management

A scalable web-based ML Ops environment for packaging Ai inference engines and publishing them to the Edge as secure network assets. It enables deployment and provides a consistent publishing mechanism.

PaaS Components

Microservice Manager

Microservice Manager efficiently delivers Ai applications across hybrid-cloud and multi-cloud environments and Edge data centers, in compliance with customer policies.

Ai-WorkStation Manager

Ai-WorkStation Manager delivers open-source Ai frameworks, curated Ai applications, and other end-to-end Ai pipelines as virtualized notebooks with zero learning curve, while increasing shareability.

Ai-HPC Manager

Ai-HPC Manager seamlessly scales to high-performance computing (HPC), crossing physical server boundaries for Ai model training and optimization, with a familiar and consistent user experience.

Ai-Database Manager

Ai-Data Lake Manager improves productivity and data handling for model training and inference engine optimization. It also accelerates data manipulation tasks when GPUs are available within the underlying Ai-MicroCloud® environment.

IaaS Components

RBAC Manager

Role-based access control (RBAC) restricts network access based on a person's role within an organization and/or Ai project team. It enables advanced access control for all SaaS and PaaS application components within the platform.
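The sketch below illustrates the general RBAC idea only and is not Ai-MicroCloud®'s implementation. Users are assigned roles, roles map to permission sets, and an access check passes if any of the user's roles grants the requested permission. The role names reuse the Product, Modeler, and Ops roles listed above. The `component:action` permission strings and the `is_allowed` helper are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical role -> permission mapping; permissions are "component:action" strings.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "modeler": {"workstation:launch", "hpc:submit"},
    "ops": {"microservice:deploy", "model:serve"},
    "product": {"asset:publish"},
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def is_allowed(user: User, permission: str) -> bool:
    """Return True if any of the user's roles grants the permission.

    Unknown roles grant nothing, so access is denied by default.
    """
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


if __name__ == "__main__":
    alice = User("alice", {"modeler"})
    print(is_allowed(alice, "workstation:launch"))   # True
    print(is_allowed(alice, "microservice:deploy"))  # False
```

Checking permissions through roles rather than individual users means that changing what a team can do only requires updating one role entry.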

Security Manager

A comprehensive security capability maps users seamlessly and securely between the web domain and meta-scheduled infrastructure across hybrid-cloud environments. Integration with enterprise LDAP and/or Active Directory makes it easy to apply consistent user and group policies, including single sign-on and multi-factor authentication.

Multi-cloud Orchestration

A scalable web-based ML Ops environment for packaging Ai inference engines and publishing them to the Edge as secure network assets. It enables deployment and provides a consistent publishing mechanism.

©️ Zeblok Computational Inc. 2022